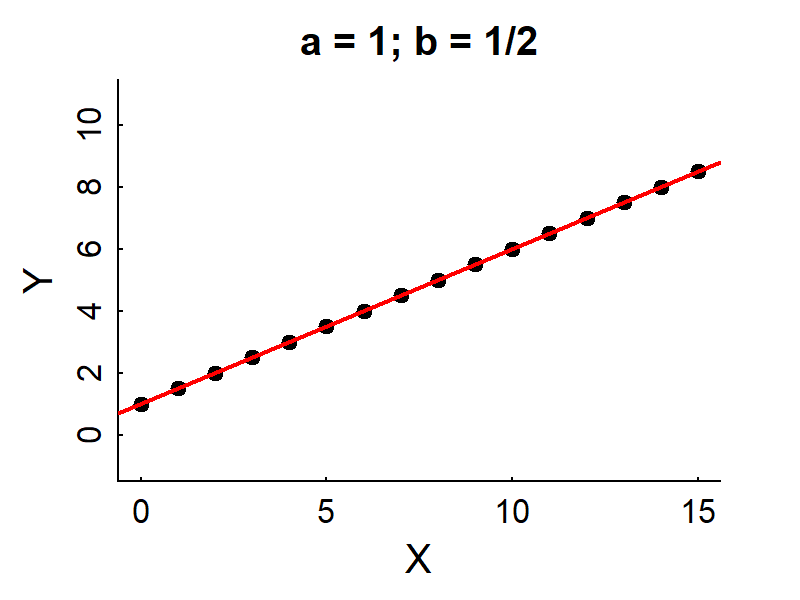

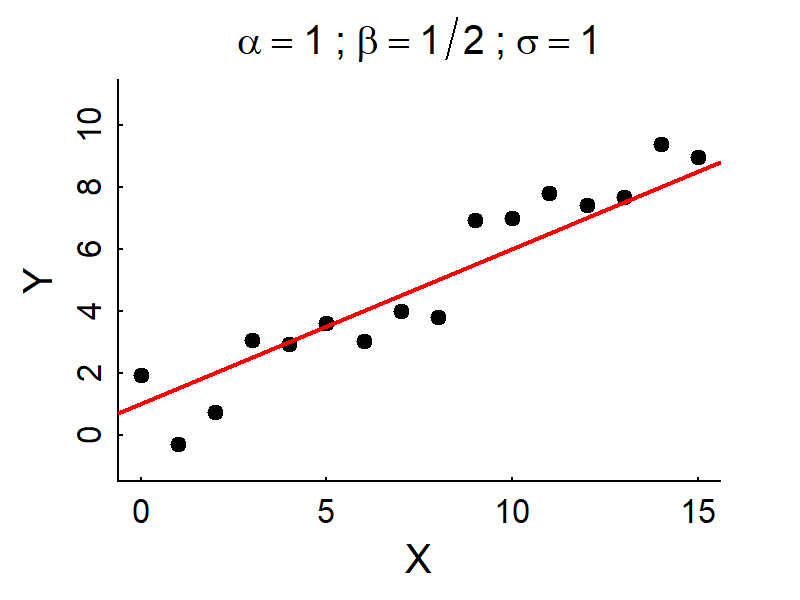

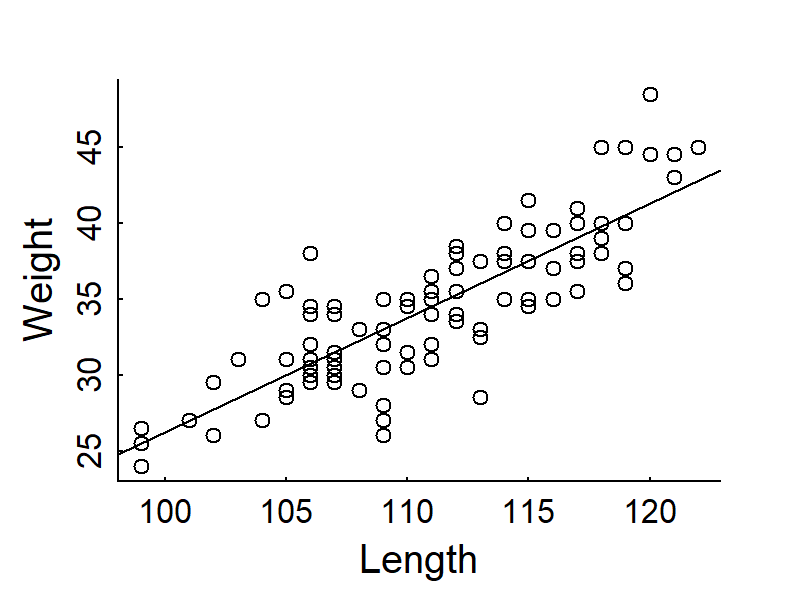

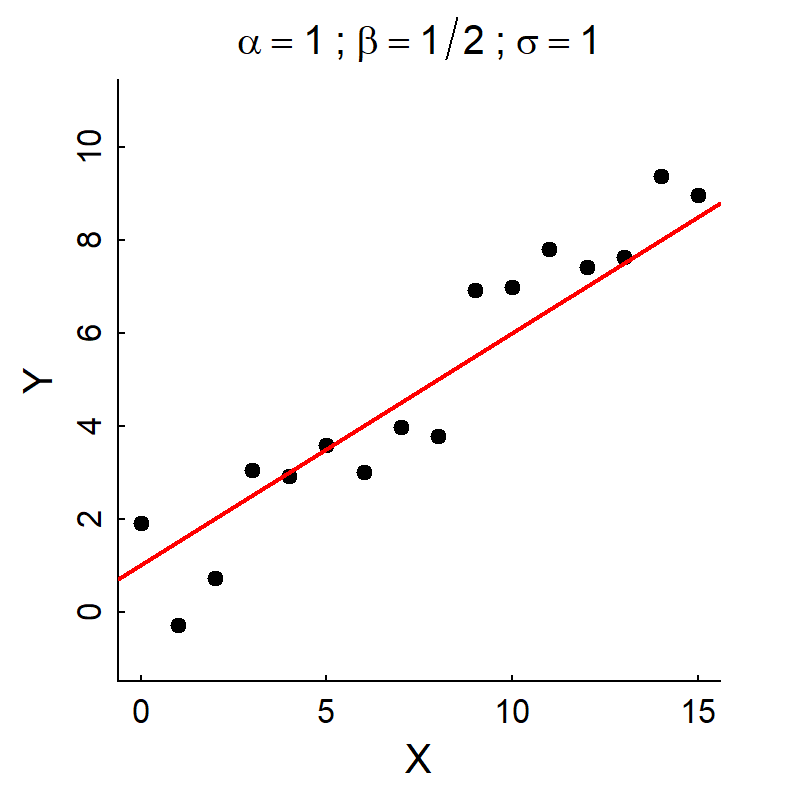

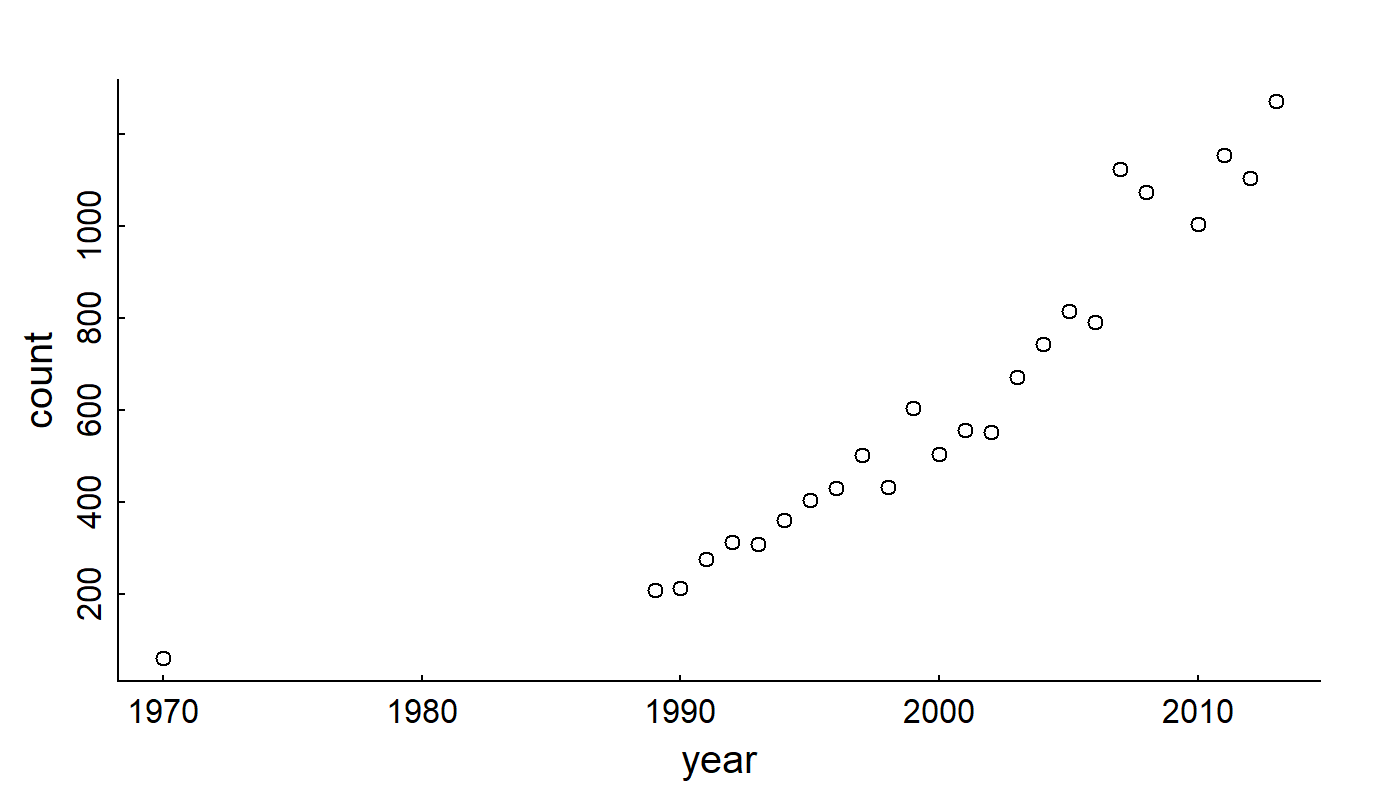

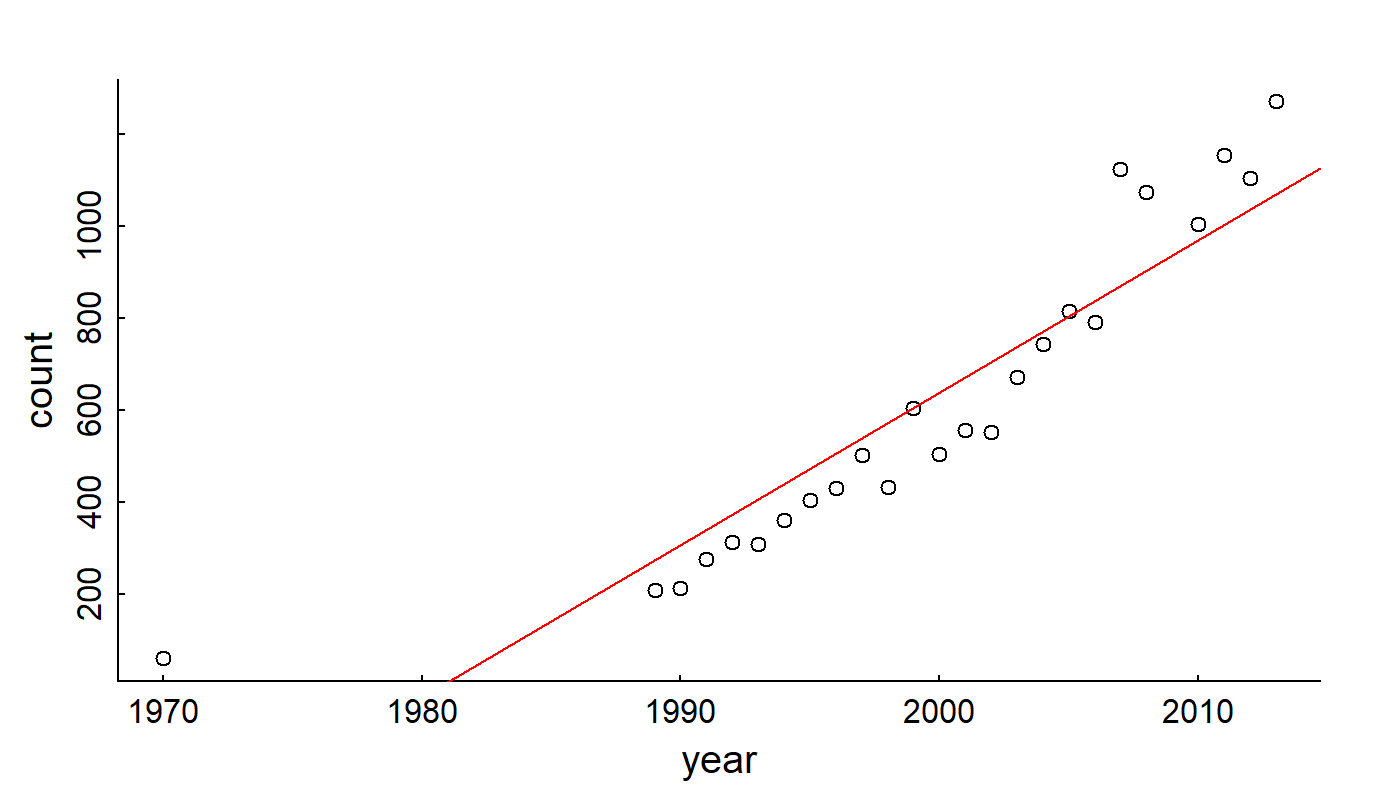

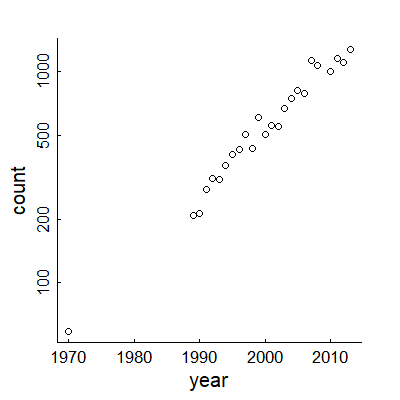

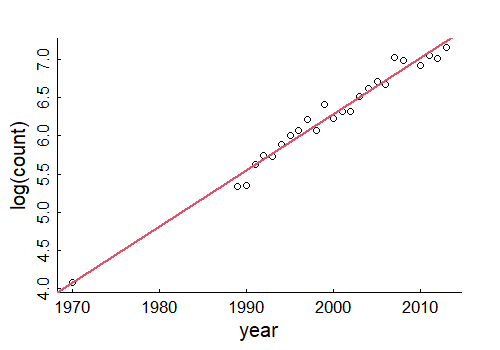

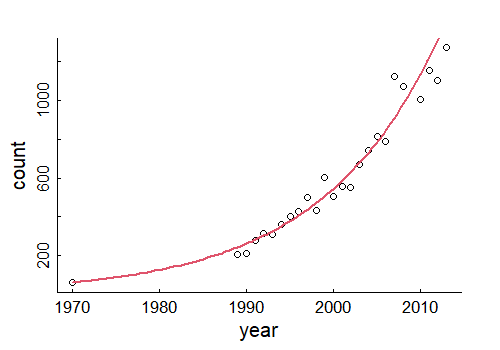

class: center, top, title-slide .title[ # Exponential Growth Part II: <strong>Linear</strong> models of <strong>exponential</strong> growth ] .subtitle[ ## <a href="https://eligurarie.github.io/EFB370/">EFB 370: Population Ecology</a> ] .author[ ### <strong>Dr. Gurarie</strong> ] .date[ ### <strong>February 14, 2024</strong> ] --- ## **Steller sea lion** (*Eumatopias jubatus*) - birth .center[ <iframe src="https://drive.google.com/file/d/1BP1FS4736pwUWYtNzSFT-tDlMgPdTi9u/preview" width="640" height="480" allow="autoplay"></iframe> ] --- # Linear modeling .small[(aka ***REGRESSION***, except I really don't like that term, for a variety of reasons to discuss in class.)] is a very general method to quantifying relationships among variables. .pull-left-60[ <img src="LinearModelsForExponentialGrowth_files/figure-html/unnamed-chunk-2-1.png" width="80%" /> ] .pull-right-30[  ] --- ## Linear Models .pull-left[ **Deterministic:** `$$Y_i = a + bX_i$$` `\(a\)` - intercept; `\(b\)` - slope <!-- --> ] .pull-right[ **Statistical:** `$$Y_i = \alpha + \beta X_i + \epsilon_i$$` `\(\alpha\)` - intercept; `\(\beta\)` - slope; `\(\epsilon\)` - **randomness!** `$$\epsilon_i \sim {\cal N}(0, \sigma)$$` <!-- --> ] --- .pull-left[ # Fitting models is easy in ! **Point Estimate** This command fits a model: .small[ ```r lm(Weight ~ Length, data = pups) ``` ``` ## ## Call: ## lm(formula = Weight ~ Length, data = pups) ## ## Coefficients: ## (Intercept) Length ## -49.1422 0.7535 ``` ] So for **each 1 cm** of length, add another **754 grams**. ] .pull-right[ ```r plot(Weight ~ Length, data = pups) abline(my_model) ``` <!-- --> The `abline` puts a line, with intercept `a` and slope `b` onto a figure. ] --- ## Some comments on linear models .pull-left[ $$ Y_i \sim \alpha + \beta X_i + \epsilon_i$$ 1. <font color = "red"> `\(\huge \epsilon_i\)` </font> is .darkblue[**unexplained variation**] or .darkblue[**residual variance**]. It is often (*erroneously*, IMO) referred to as .red["**error**"]. It is a **random variable**, NOT a **parameter** or **data**. 3. <font color = "red"> `\(\huge \alpha+\beta X_i\)` </font> is the .darkblue[**predictor**], or the .darkblue[**"modeled"**] portion. There can be any number of variables in the **predictor** and they can have different powers, so: `$$Y_i \sim {\cal N}(\alpha + \beta X_i + \gamma Z_i + \delta X_i^2 + \nu X_i Z_i, \sigma )$$` is also a **linear** model. <!-- 2. A **better**, more sophisticated way to think of this model is not to focus on isolating the residual variance, but that the whole process is a random variable: `$$Y_i \sim {\cal N}(\alpha + \beta X_i, \sigma)$$` This is better because: (a) the three parameters ($\alpha, \beta, \sigma$) are more clearly visible, (b) it can be "generalized". For example the **Normal distribution** can be a **Bernoulli distribution** (for binary data), or a **Poisson distribution** for count data, etc. --> ] .pull-right[ <!-- --> ] --- .pull-left-60[ # Statistical inference **Statistical inference** is the *science / art* of observings *something* from a **portion of a population** and making statements about the **entire population**. In practice - this is done by taking **data** and **estimating parameters** of a **model**. (This is also called *fitting* a model). Two related goals: 1. obtaining a **point estimate** and a **confidence interval** (precision) of the parameter estimate. 2. Assessing whether particular (combinations of) factors, i.e. **models**, provide any **explanatory power**. This is (almost always) done using **Maximum Likelihood Estimation**, i.e. an algorithm searches through possible values of the parameters that make the model **MOST LIKELY** (have the highest probability) given the data. ] .pull-right-40[  .small[Another gratuitous sea lion picture.] ] --- .pull-left-60[ ## Statistical output <font size="4"><pre> ``` ## ## Call: ## lm(formula = Weight ~ Length, data = pups %>% subset(Island == ## "Raykoke")) ## ## Residuals: ## Min 1Q Median 3Q Max ## -7.498 -1.718 0.023 1.764 7.276 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -49.14222 5.75796 -8.535 1.81e-13 *** ## Length 0.75345 0.05193 14.510 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.761 on 98 degrees of freedom ## Multiple R-squared: 0.6824, Adjusted R-squared: 0.6791 ## F-statistic: 210.5 on 1 and 98 DF, p-value: < 2.2e-16 ``` </pre></font> ] -- .pull-right-40[ ### 1. Point estimates and confidence intervals .red.center[ **Intercept** ( `\(\alpha\)` ): `\(-49.14 \pm 11.5\)` **Slope** ( `\(\beta\)` ): `\(0.75 \pm 0.104\)` ] ### 2. Is the model a good one? *p*-values are very very small, in particular for **slope** Proportion of variance explained is high: .blue.large[$$R^2 = 0.68$$] ] --- .pull-left-60[ ## Statistical output <font size="4"><pre> ``` ## ## Call: ## lm(formula = Weight ~ Length, data = pups %>% subset(Island == ## "Raykoke")) ## ## Residuals: ## Min 1Q Median 3Q Max ## -7.498 -1.718 0.023 1.764 7.276 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -49.14222 5.75796 -8.535 1.81e-13 *** ## Length 0.75345 0.05193 14.510 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.761 on 98 degrees of freedom ## Multiple R-squared: 0.6824, Adjusted R-squared: 0.6791 ## F-statistic: 210.5 on 1 and 98 DF, p-value: < 2.2e-16 ``` </pre></font> ] .pull-right-40[ ### Interpreting statistical results The "standard error" around the **Length** factor is 0.05. The "true value" lies within **TWO** standard errors of the **point estimate** with 95% probability. So the estimate of the slope with **confidence interval** is (in g/cm): `\(\widehat{\beta} = 754 \,g/cm \pm 104\)` The `\(p\)`-value around the **Length** factor is `\(<2 \times 10^{-16}\)` .. i.e. **0** This says that there is NO chance that you would get this steep a slope if there were NO relationship between Length and Weight (the null hypothesis). So we've performed both **estimation** and **hypothesis testing** with this model. ] --- ### Models and Hypotheses > .large[**Every *p*-value is a Hypothesis test.**] .center[ <table class="table" style="margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:right;"> Estimate </th> <th style="text-align:right;"> Std. Error </th> <th style="text-align:right;"> t value </th> <th style="text-align:right;"> Pr(>|t|) </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> (Intercept) </td> <td style="text-align:right;"> -49.142 </td> <td style="text-align:right;"> 5.758 </td> <td style="text-align:right;"> -8.535 </td> <td style="text-align:right;"> 0 </td> </tr> <tr> <td style="text-align:left;"> Length </td> <td style="text-align:right;"> 0.753 </td> <td style="text-align:right;"> 0.052 </td> <td style="text-align:right;"> 14.510 </td> <td style="text-align:right;"> 0 </td> </tr> </tbody> </table> ] .large[ - First hypothesis test: `\(H_0\)` .darkred[intercept = 0] - Second hypothesis: `\(H_0\)` .blue[slope = 0] Both null-hypotheses strongly rejected. ] --- class: bottom .pull-left[ ## WA sea otter data: .footnote[Source: https://wdfw.wa.gov/species-habitats/species/enhydra-lutris-kenyoni] ```r WA <- read.csv("data/WA_SeaOtters_PopGrowth.csv") plot(WA) ``` <!-- --> ] .pull-right[ ## Fit a linear model .center[ ```r WA_lm <- lm(count ~ year, data = WA) plot(WA); abline(WA_lm, col = "red") ``` <!-- --> ]] .center[**What are some problems with this model1?**] --- ## Plot on Log scale: Much more linear looking! .pull-left[ ```r plot(WA, log = "y") ``` <!-- --> ] .pull-right[ ### Linear model of *log(count)* ```r logWA_lm <- lm(log(count) ~ year, data = WA) logWA_lm ``` ``` ## ## Call: ## lm(formula = log(count) ~ year, data = WA) ## ## Coefficients: ## (Intercept) year ## -140.22274 0.07325 ``` ] --- .pull-left[ ### Linear model of *log(count)* ```r logWA_lm <- lm(log(count) ~ year, data = WA) logWA_lm ``` ``` ## ## Call: ## lm(formula = log(count) ~ year, data = WA) ## ## Coefficients: ## (Intercept) year ## -140.22274 0.07325 ``` ] .pull-right[ ### A little math: `$$\log(N_i) = \alpha + \beta \, Y_i$$` `$$N_i = \exp(\alpha) \times \exp(\beta \, Y_i)$$` `$$N_i = e^\alpha {e^\beta}^{Y_i}$$` `$$N_i = N_0 \lambda ^ {Y_i}$$` `$$\lambda = e^{\beta} = e^{0.07325} = 1.076$$` ] > SO ... percent rate of growth is about 7.6%. --- .pull-left[ ## Plot linear model fit ```r plot(log(count)~year, data = WA) abline(lm(log(count)~year, data = WA), col = 2, lwd = 2) ``` <!-- --> ] .pull-right[ ## Plot exponential growth ```r plot(count~year, data = WA) curve(exp(-140.2 + 0.07325 * x), add = TRUE, col = 2, lwd = 2) ``` <!-- --> ] .center[Nice fit!] --- ## Summary stats and Confidence intervals .pull-left[ **Summary stats** ```r summary(logWA_lm) ``` ``` ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -140.2227 4.7318 -29.6344 0 ## year 0.0733 0.0024 30.9533 0 ``` ] .pull-right-40[ **95% confidence intervals** `$$\widehat{\beta} = 0.073 \pm 2\times{0.0024} = \{0.068, 0.078\}$$` `$$\widehat{\lambda} = \exp(0.073 \pm 2\times{0.0024}) = \{1.071, 1.081\}$$` So annual growth rate is `\(7.6\% \pm 0.5\)`, with 95% Confidence. ] > **Key takeaway:** With linear modeling we can use ALL the data to (a) get a great **point estimate** and (b) quantify **uncertainty** on that estimate. <!-- ## Remember *environmental* stochasticity? <div style="float:left; width: 50%;"> Typical growth model: `$$N(t) = N_0 e^{Rt}$$` where `$$R \sim {\cal N(\mu_r, \sigma_r)}$$` </div> <div style="float:right; width: 50%;"> Leads to something like: <img src="LinearModelsForExponentialGrowth_files/figure-html/unnamed-chunk-21-1.png" width="100%" /> </div> Important to remember: environmental stochasticity is relevant at ALL population sizes, in contrast to demographic stochasticity. ## Consider the discrete geometric growth equation: <div style="float:left; width: 50%;"> `$$N(t) = N_0 e^{Rt}$$` `$$\log N(t) = \log N_0 + Rt$$` `$$N_t = N_0 \lambda ^ t$$` `$$\log(N_t) = \log(N_0) + \log{\lambda} \, t$$` add some randomness .... `$$\log(N_t) = \log(N_0) + \log{\lambda} \, t + \epsilon_t$$` </div> <div style="float:right; width: 50%;"> You can estimate this with a **linear model** with the following equivalences: `$$Y_t = \log(N_t)$$` `$$\alpha = \log{N_0}$$` `$$\beta = \log(\lambda)$$` </div> -->