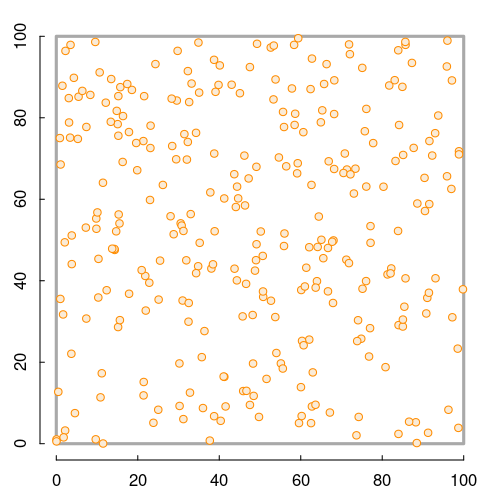

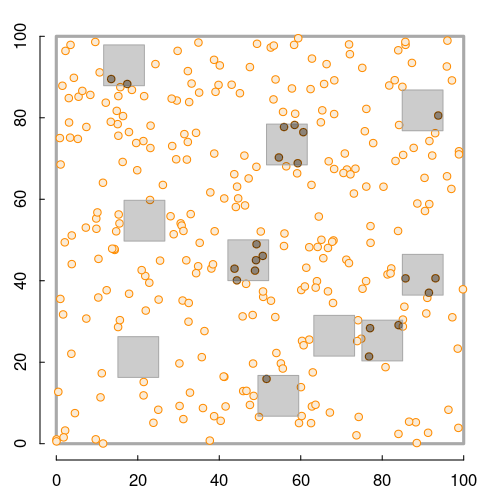

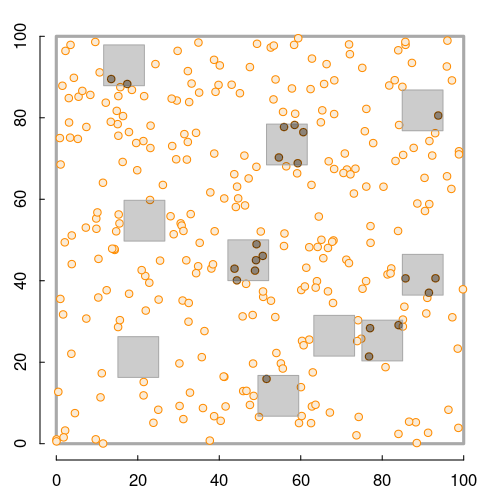

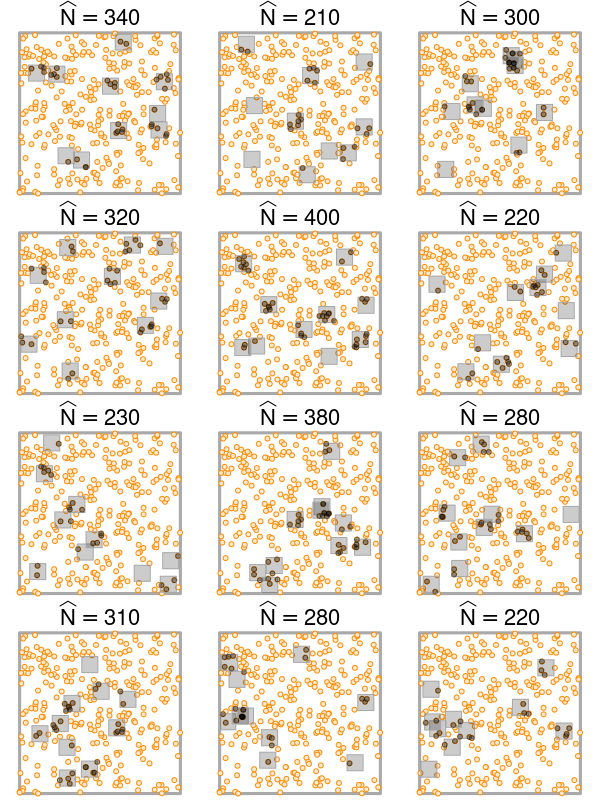

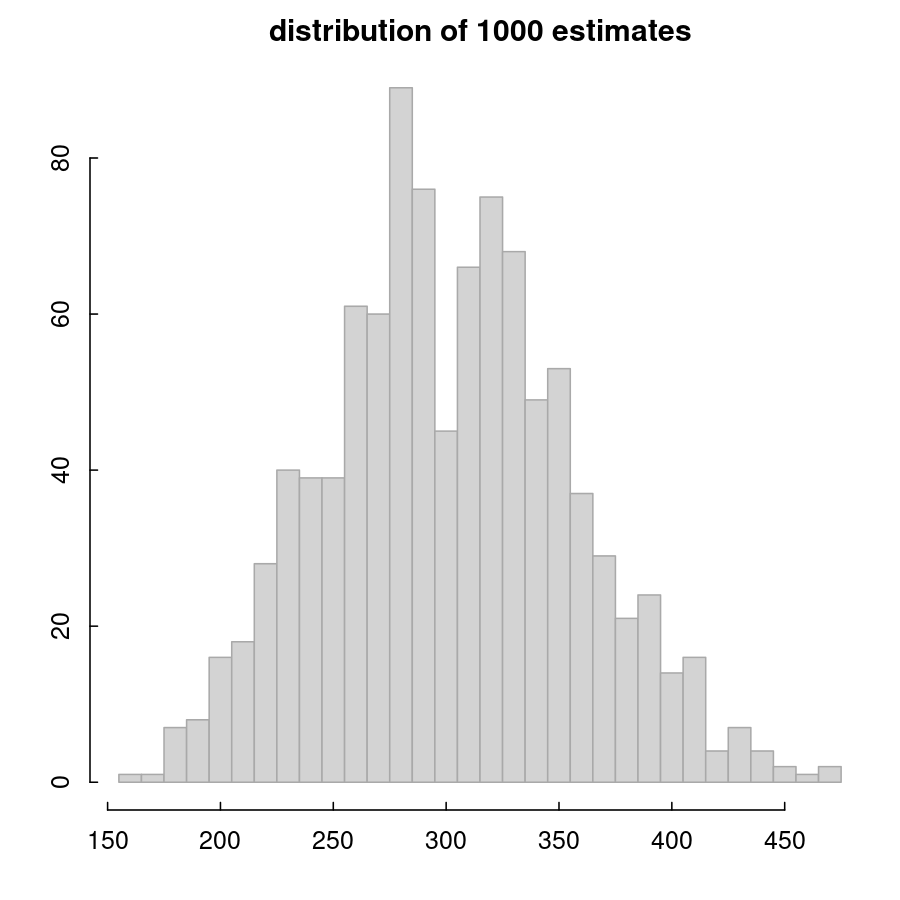

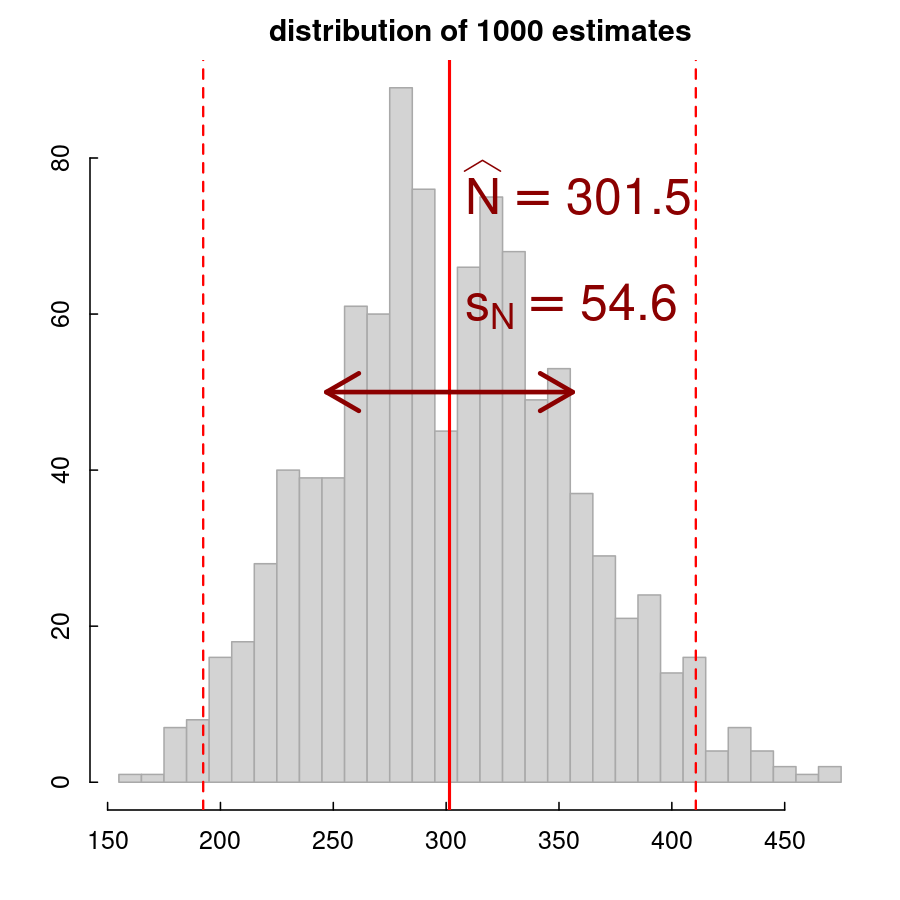

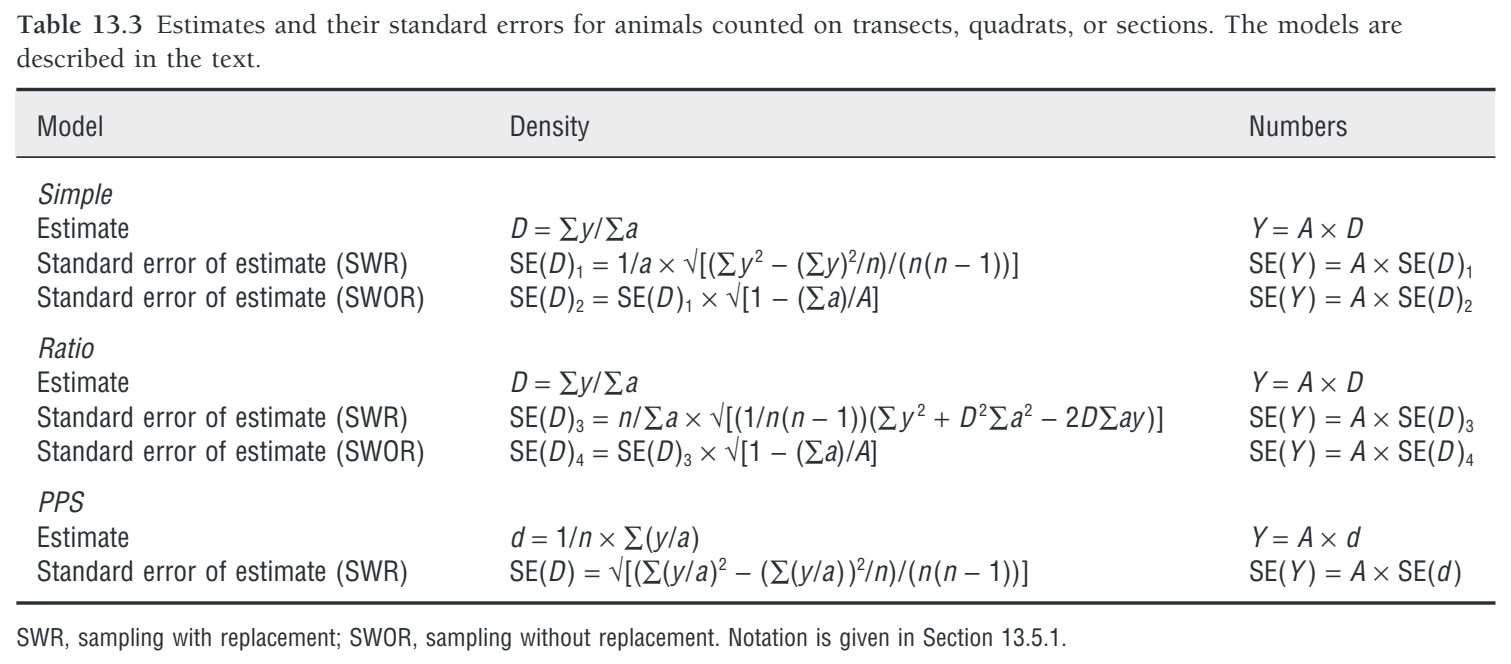

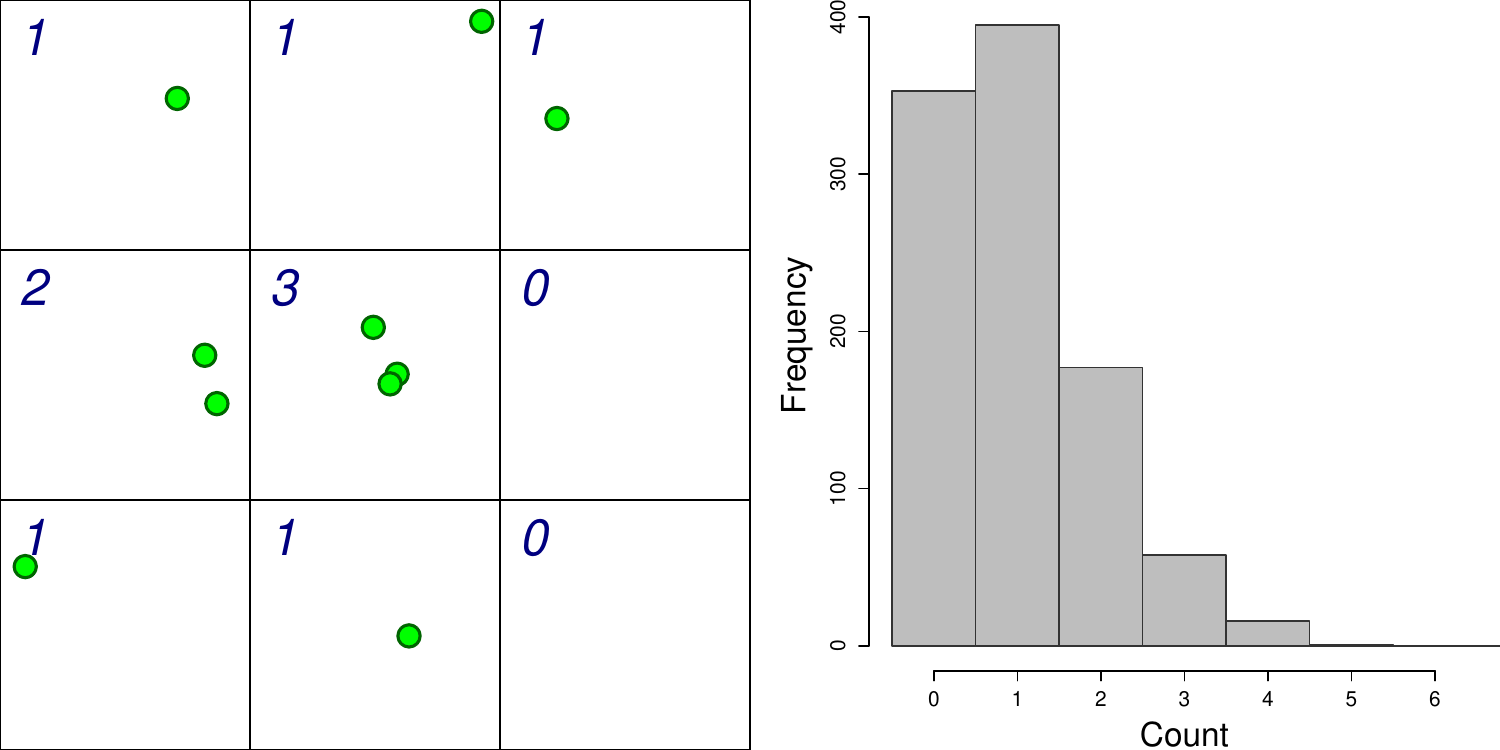

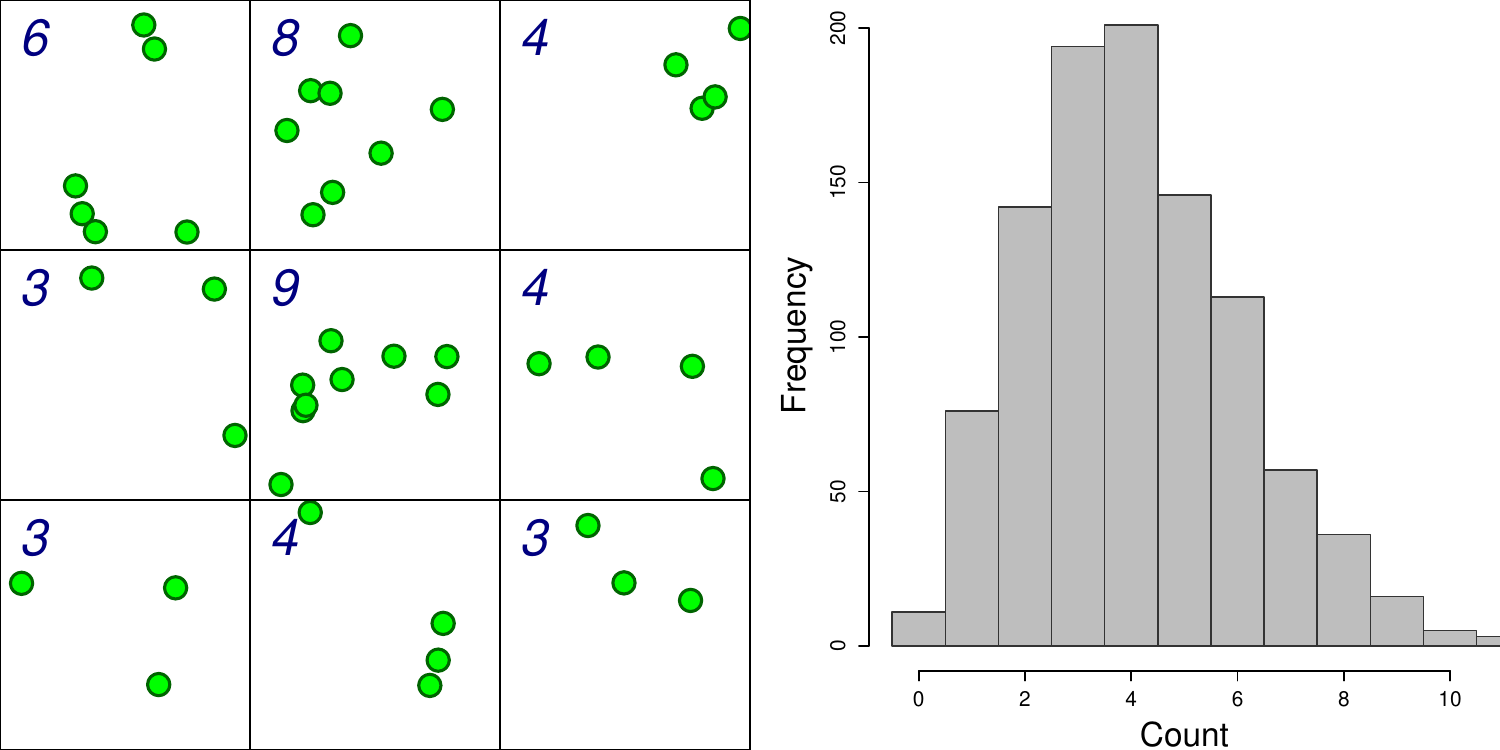

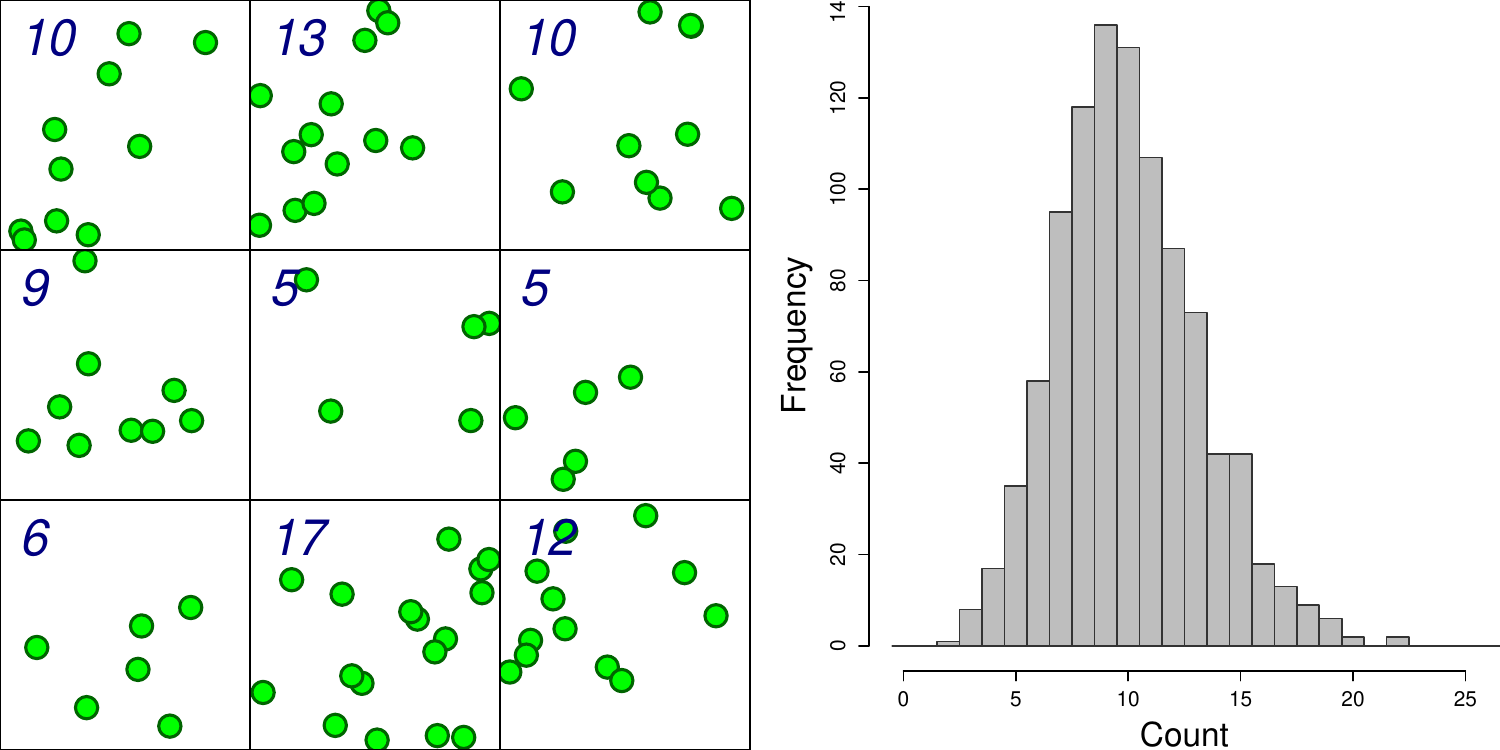

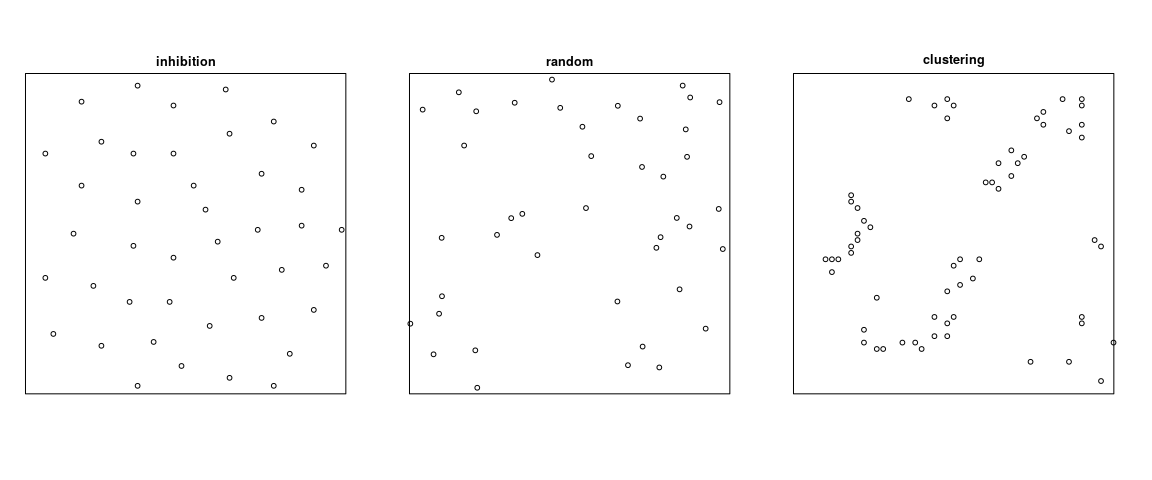

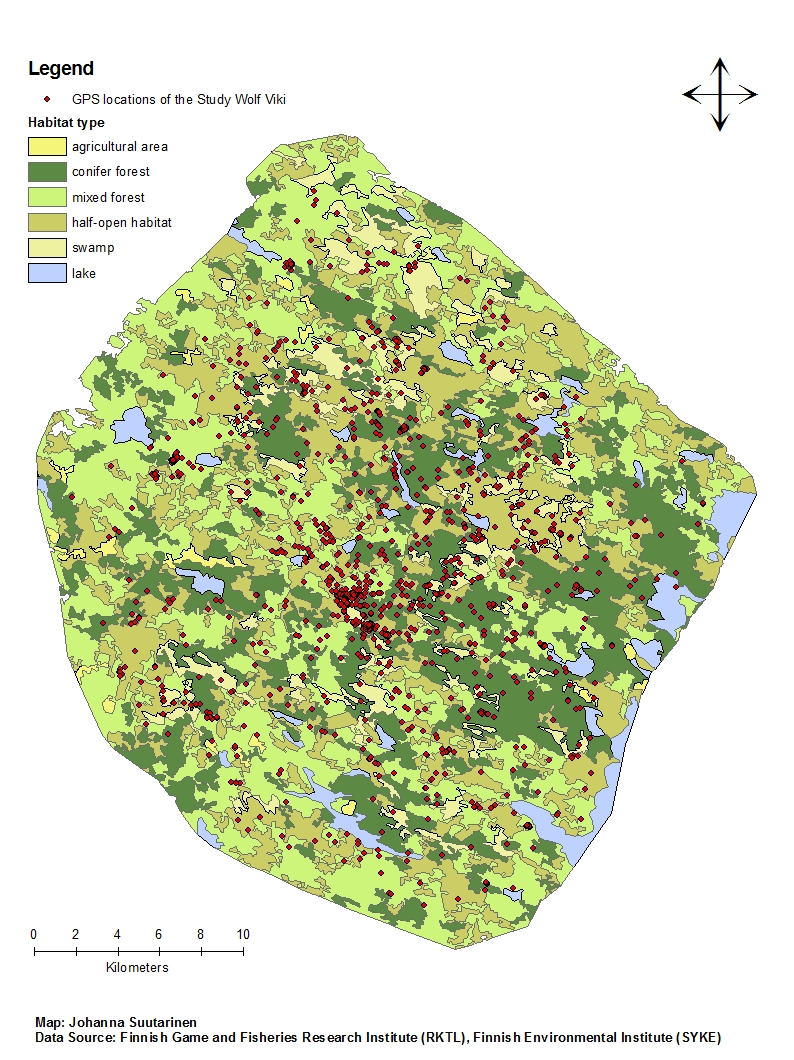

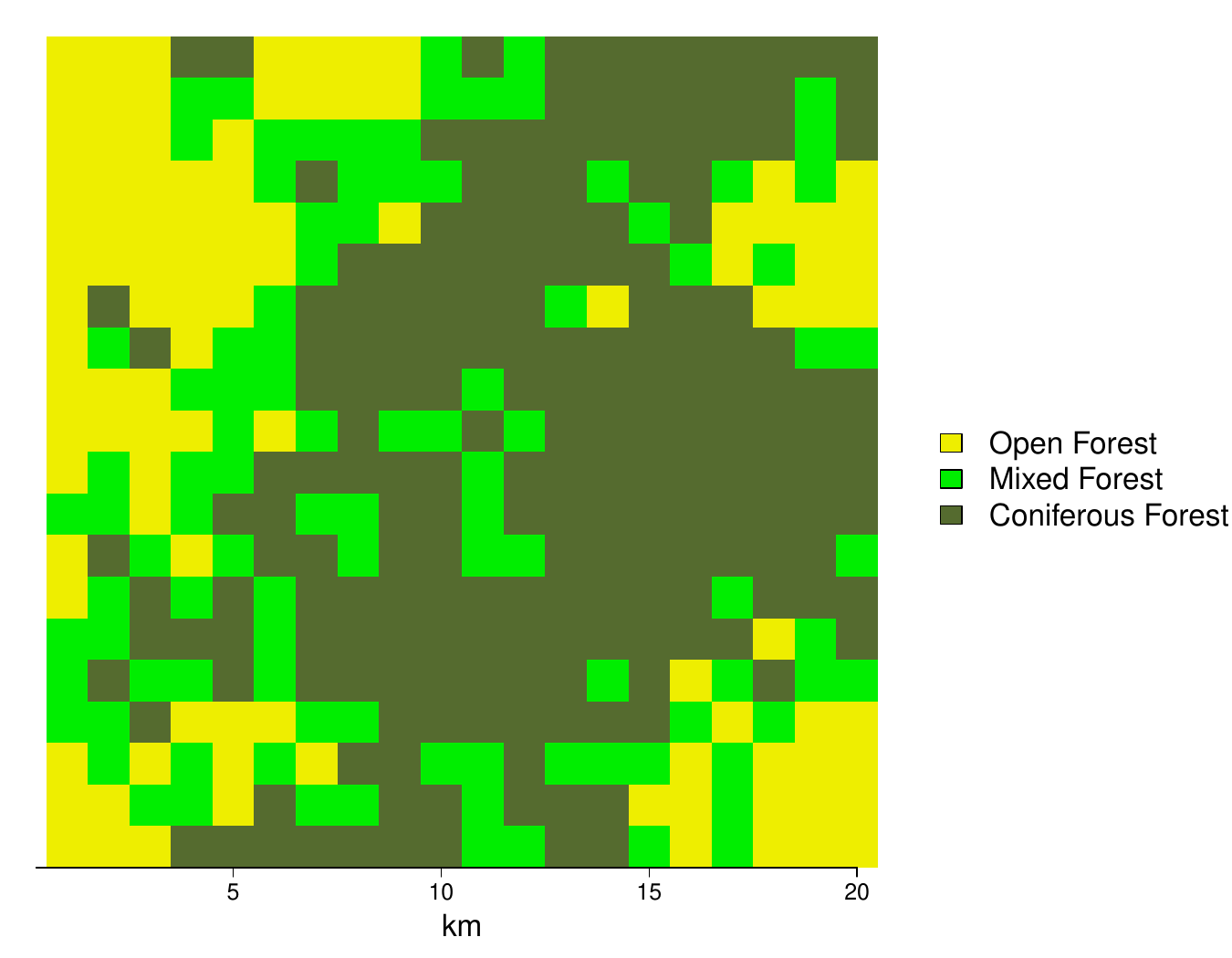

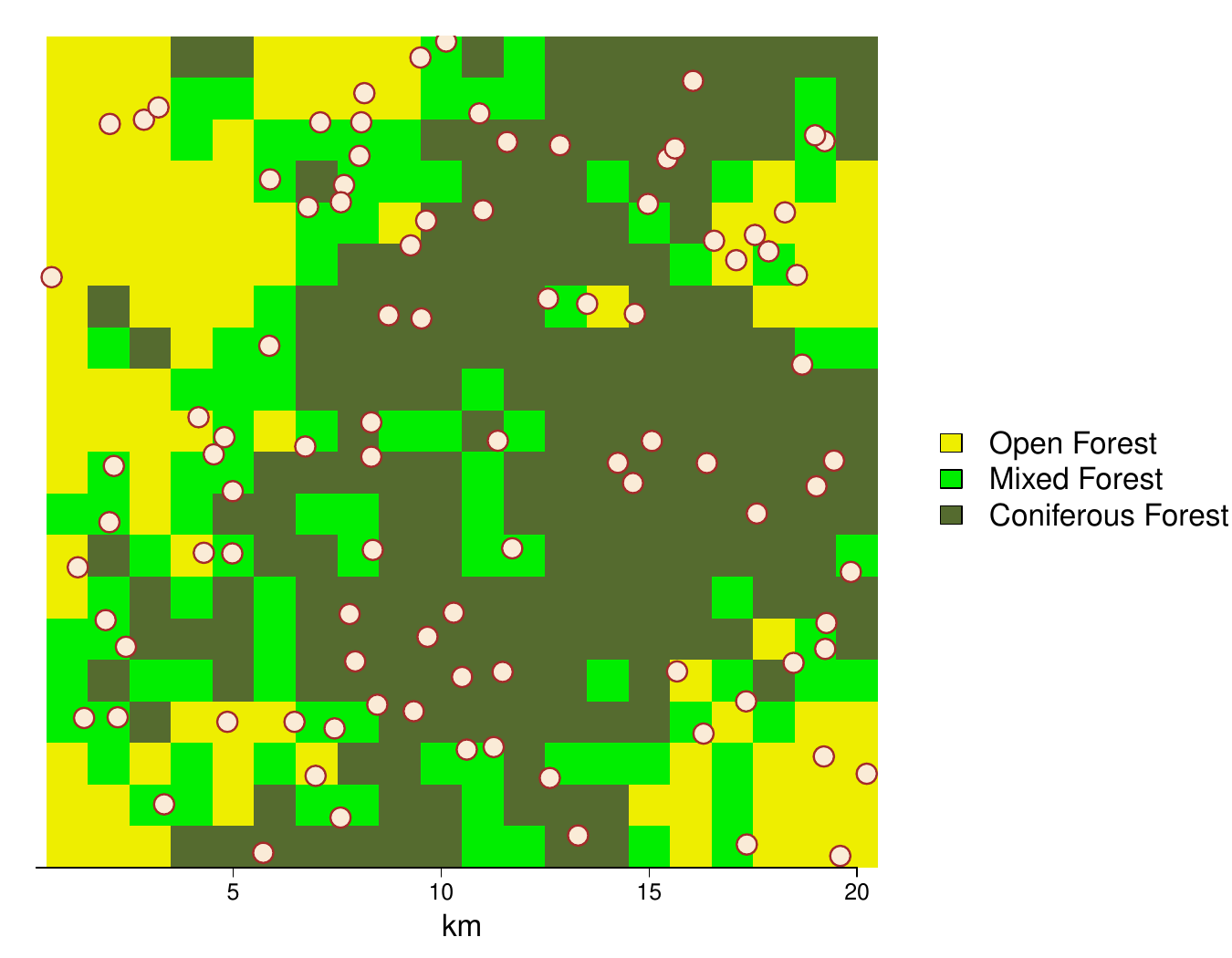

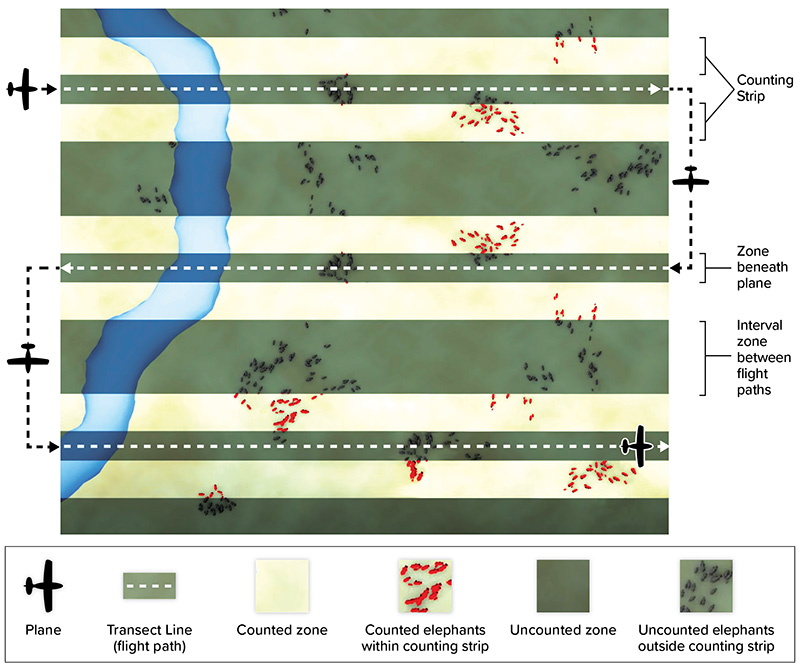

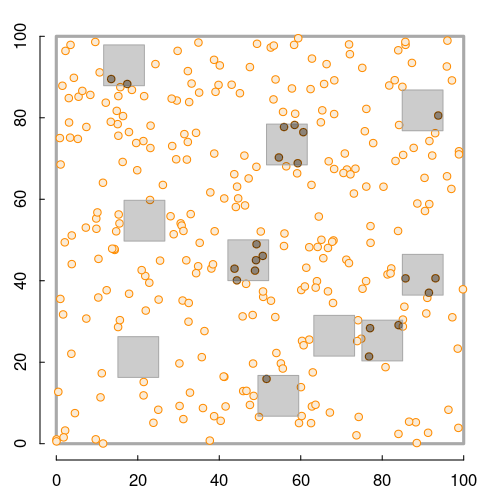

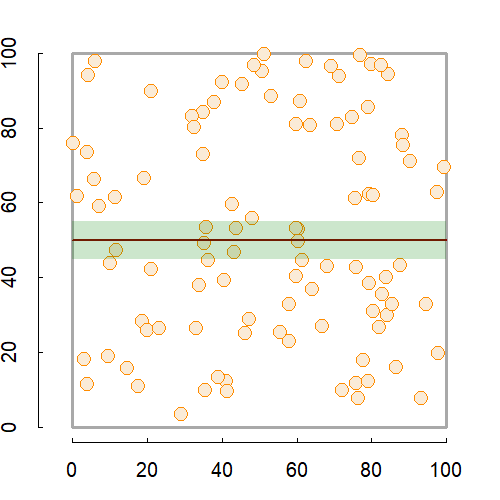

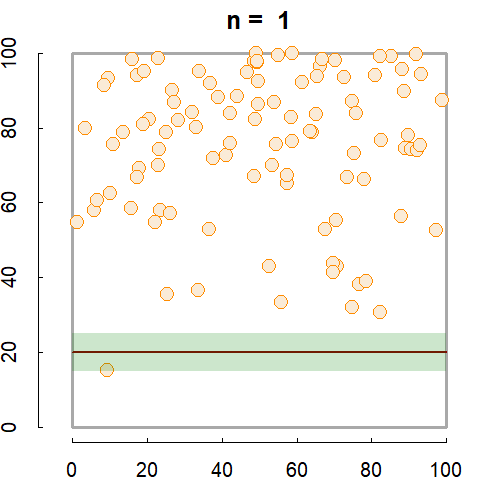

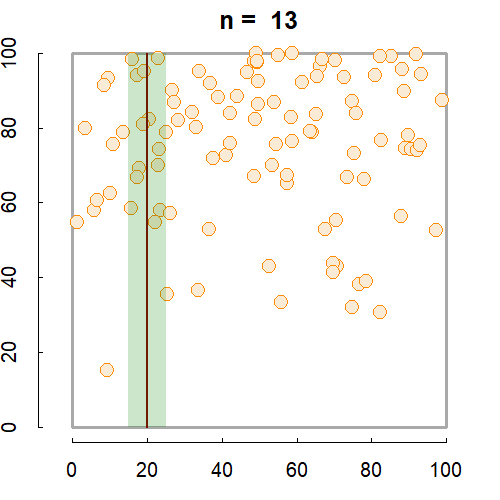

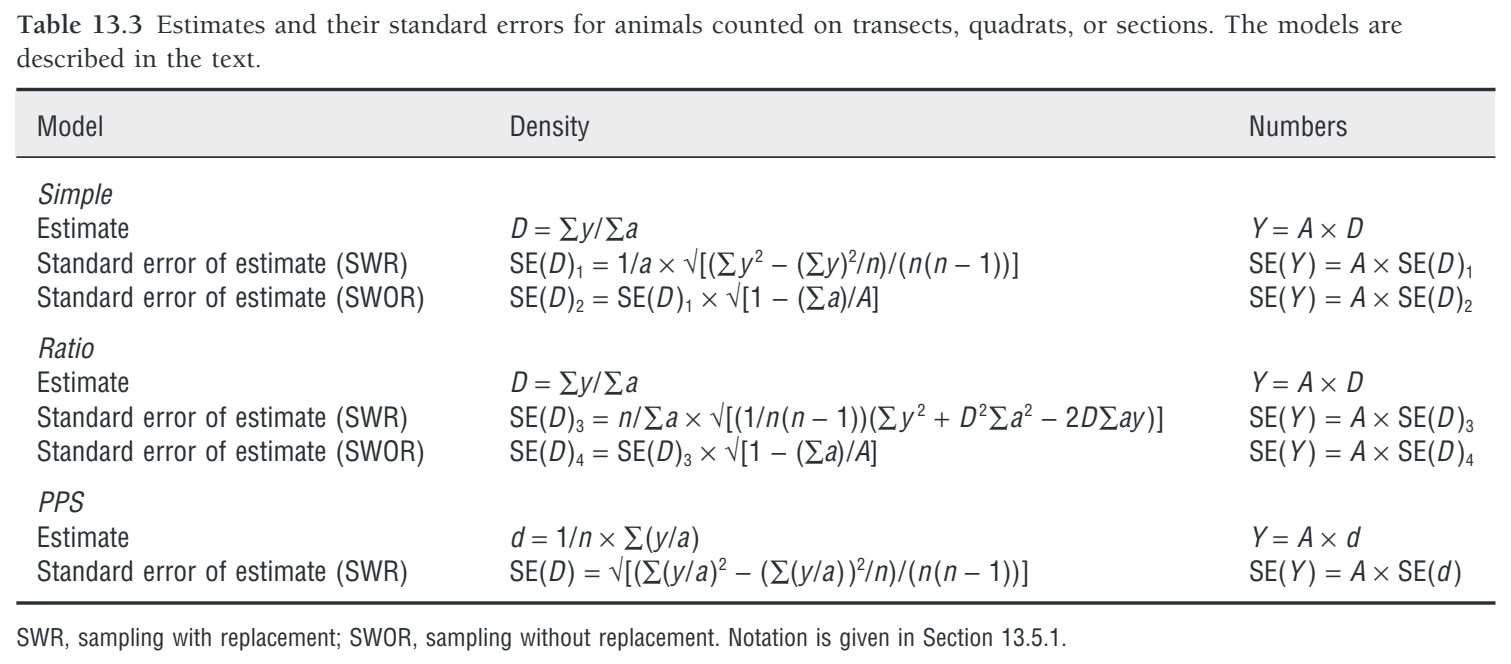

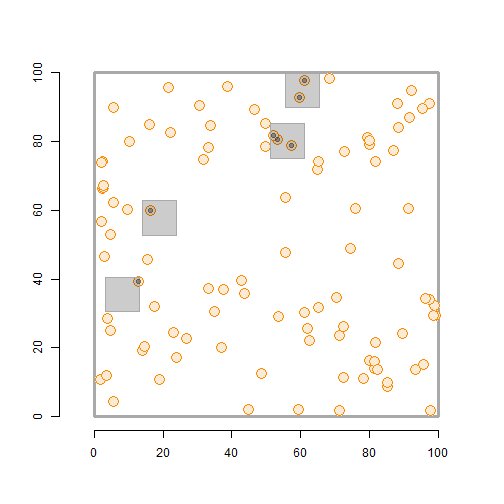

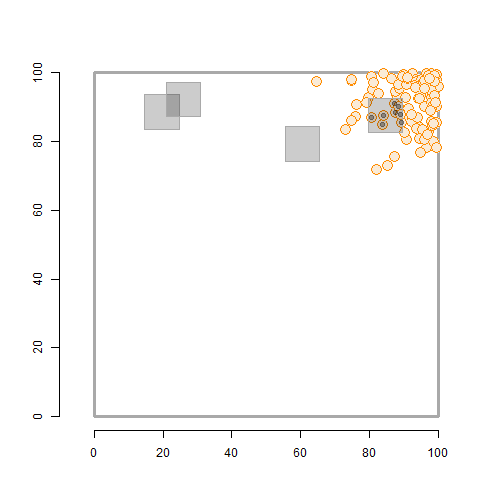

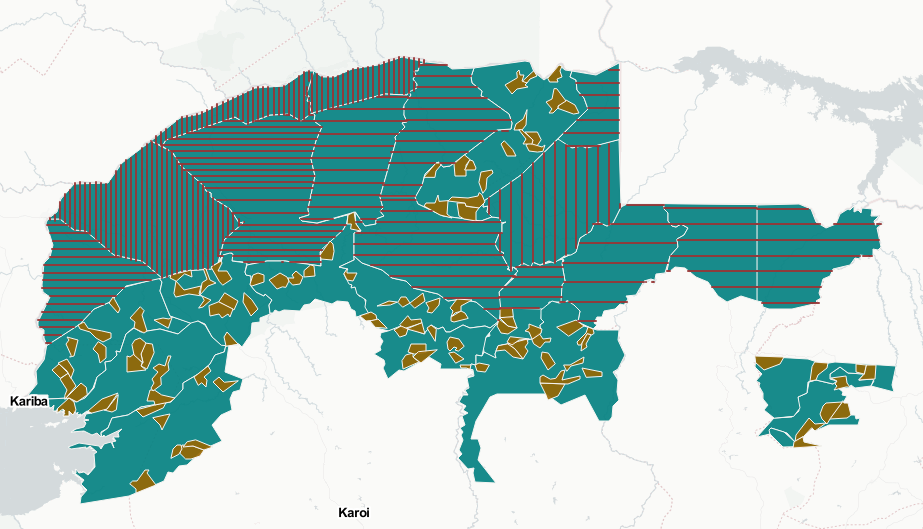

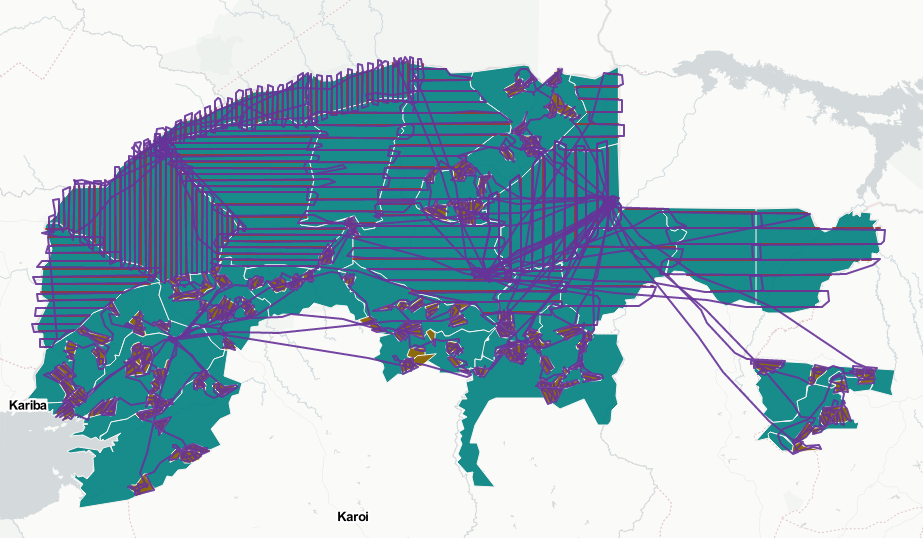

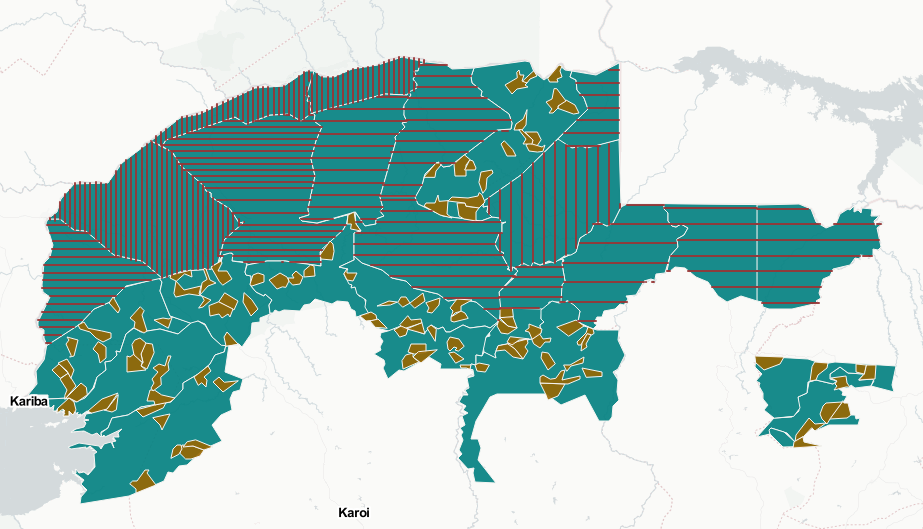

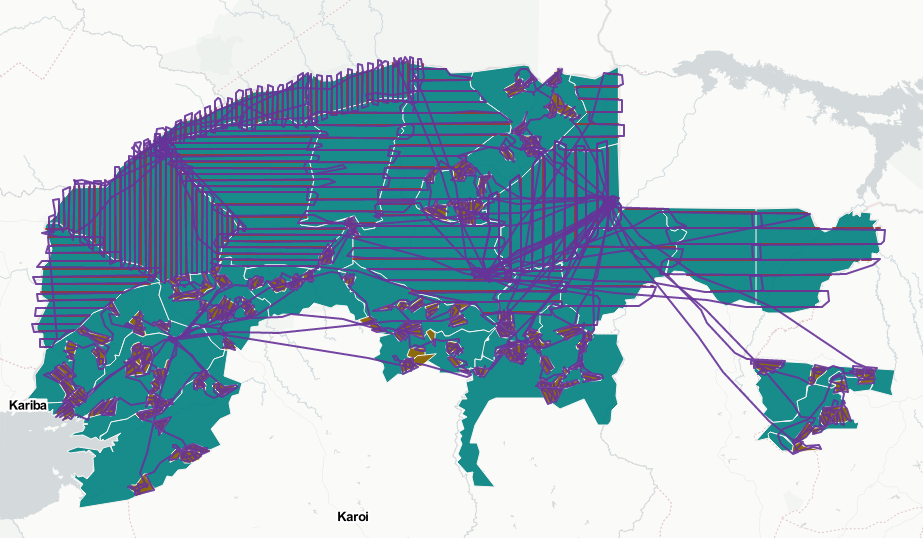

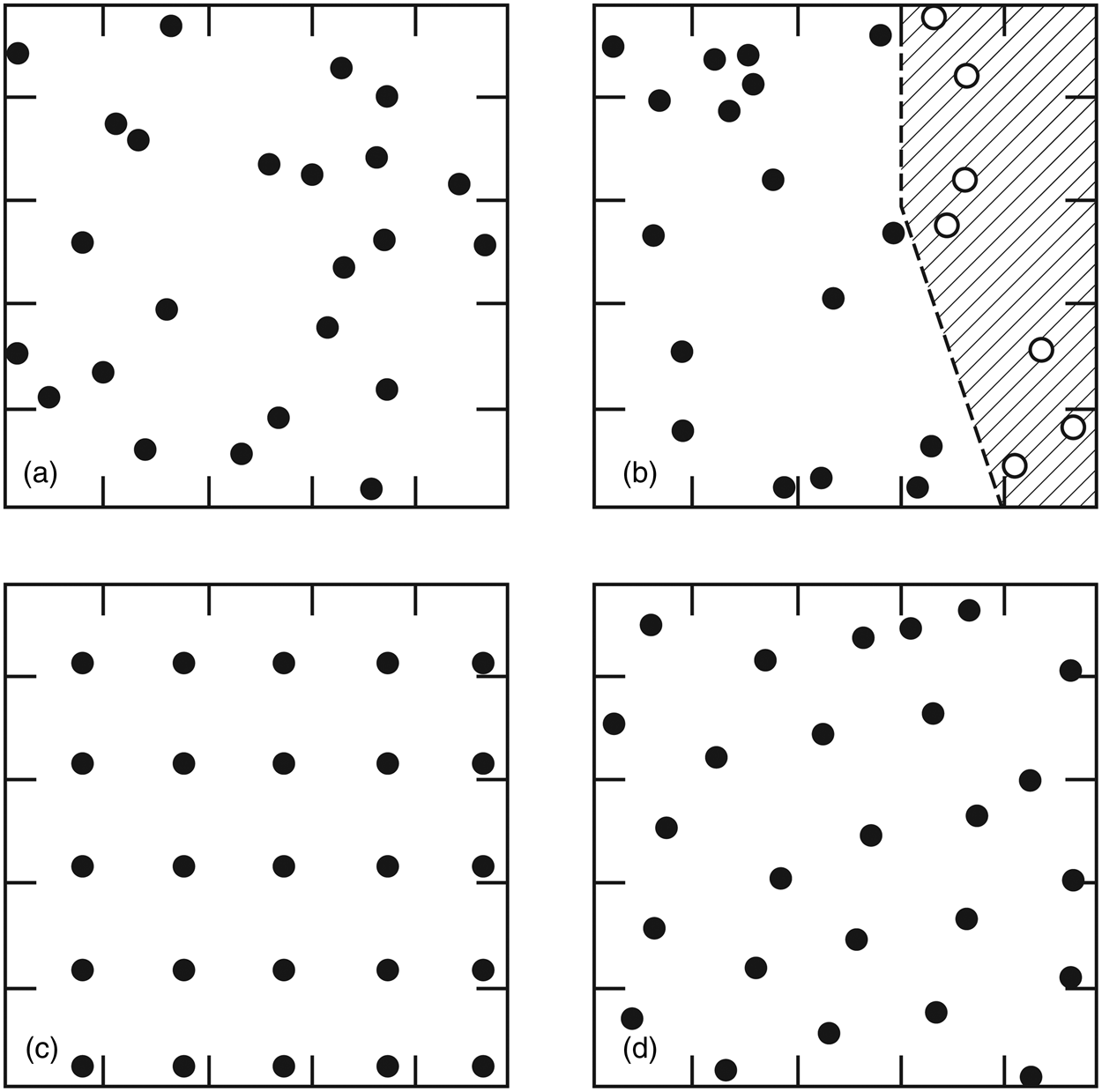

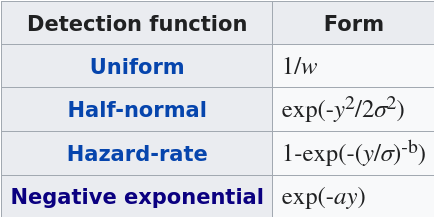

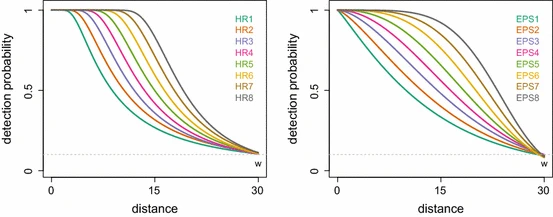

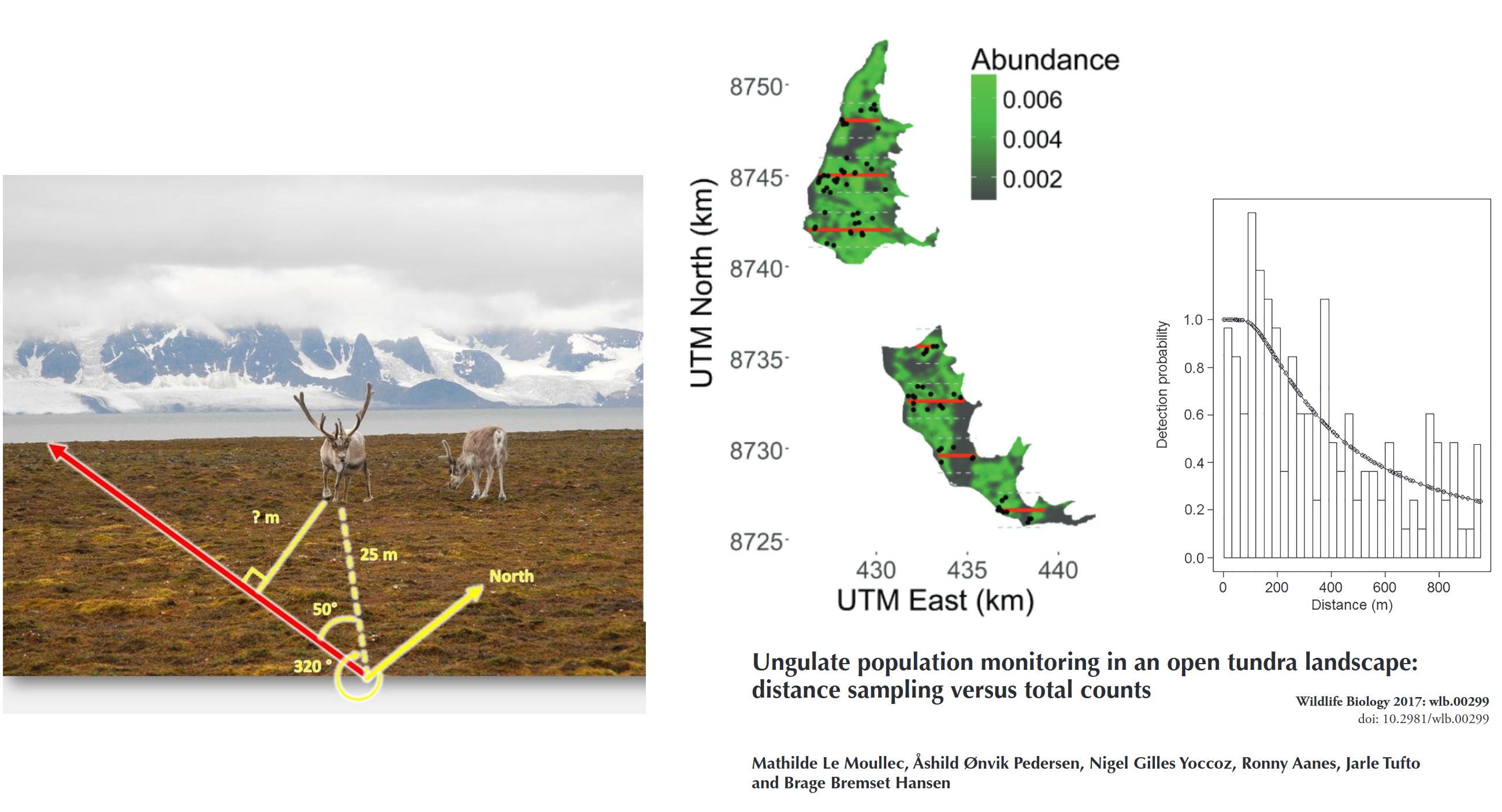

class: left, title-slide .title[ # .white[Counting Animals Part II: Sample Counts] ] .subtitle[ ## .white[EFB 390: Wildlife Ecology and Management] ] .author[ ### .white[Dr. Elie Gurarie] ] .date[ ### .white[September 11, 2025] ] --- <!-- https://bookdown.org/yihui/rmarkdown/xaringan-format.html --> ## Drawbacks of total counts / censusing .pull-left-60.large[ Expensive & labor-time intensive Impractical for MOST species / systems - need to ALL be **visible** - the **ENTIRE** study area needs to be survey-able Hard to assess precision ] .pull-right-40[  .center[**Hippos**] .small.grey[(Marc Mol/Mercury Press/Caters)] ] --- ### Is the great Elephant Census a Census? <iframe src="https://www.youtube.com/embed/imvehfydUpc?controls=0" width="900px" height="500px"> </iframe> --- class: inverse # Sample counts ### Simple idea: - count *some* of the individuals - extrapolate! -- ### In practice: - Necessarily - less **precise** due to **sampling error**. - BUT, if properly done, more **accurate** and **much less effort**. - Involves some (tricky) *statistics* and *modeling!* ### By Necessity: **Very Common** --- ## A random population .pull-left-60[  ] -- .pull-right-40[ ### Population density .large[$$N = A \times D$$] - `\(N\)` - total count - `\(A\)` - total area - `\(D\)` - overall density ] .red[***Which of these do we know?***] --- ## Sampling from the population .pull-left-60[  .center[**Squares**, aka, **quadrats**] ] -- .pull-right-40[ ### *Sample* density: `$$n_{sample} = \sum_{i=1}^k n_i$$` `$$a_{sample} = \sum_{i=1} a_i$$` `$$d_{sample} = {n_{sample} \over a_{sample}}$$` ] --- ### Sample vs. Population | Population | Sample --|:--:|:-- size | `\(N\)` | `\(n_s\)` area | `\(A\)` | `\(a_s\)` density | `\(D\)` | `\(d_s\)` "sample density" `\(d_s\)` is an *estimate* (guess) of total density: `$$\widehat{D} = d_s$$` -- ### True population: .green.large[$$N = A \times D$$] Population **estimate** (best guess for `\(N\)`): just replace true (unknown) density `\(D\)` with *sampling estimate* of density `\(d_s\)`: .red.large[$$\widehat{N} = A \times \widehat{D} = A \times d_s = A \times {n_s \over a_s}$$] --- .pull-left[ ## Example  ] ### Data .blue[10 quadrats; 10x10 km each] .blue[ `n = {0,0,5,0,3,1,2,3,6,1}`] .red[**note:** *variability / randomness!*] -- .pull-right[ ### Analysis .green[ `\(n_s = \sum n_i = 21\)` `\(d_s = \widehat{D} = {21 \over 10 \times 10 \times 10} = 0.021\)` `\(A = 100 \times 100\)` ] ] -- #### final estimate: .Large.green[ `$$\widehat{N} = \widehat{D} \times A = 100\times100\times0.021 = \textbf{210}$$`] --- ## What happens when we do this many times? .pull-left[  ] .pull-right[ Every time you do this, you get a different value for `\(\widehat{N}\)`.  ] --- ### Statistics .pull-left-60[ **Mean of estimates:** `$$\widehat{N} = 301.5$$` **S.D. of estimate:** `$$s_{\widehat{N}} = 54.6$$` .red[**standard error** (***SE***) = *standard deviation* of an *estimate*] **95% Confidence Interval:** `$$\widehat{N} \pm 1.96 \times SE = \{195-408\}$$` ] .pull-right-40[  ] .green[**note:** the 1.96 (basically 2) is the number of standard deviations [on either side] that captures 95% of a normal distribution.] -- Conclusion: this estimate is **accurate** (unbiased), but not very **precise** (big confidence interval). --- ## Estimating **precision** of the estimate **v. 1** **IF:** Total area covered is small: `\(a_s \ll A\)` (.green[.small[*Fryxell says < 15% coverage*]]) **OR: ** You are potentially resampling the same individuals (.green[.small[***Sampling With Replacement*** [SWR]]]) **And: ** samples are .blue[*distributed throughout the range*] - (.green.small[a BIG assumption to revisit!]) **Then:** -- .darkred[$$SE(\widehat{N}) = {A \over a} {\sqrt{\sum n_i} \over k}$$] - `\(n_i\)` is set of **sample counts** - `\(k\)` is the **number of samples**: `\(i =\{1,2,...,k\}\)` ) - `\(a\)` is the area of the sample frame - `\(A\)` is the total area --- ## In our example .Large.darkred[$$SE(\widehat{N}) = {A \over a} {\sqrt{\sum n_i} \over k}$$] - `\(n_i\)` is set of **sample counts** - `\(k\)` is the **number of samples**: `\(i =\{1,2,...,k\}\)` ) - `\(a\)` is the area of the sample frame raw counts: `n = {0,0,5,0,3,1,2,3,6,1}` -- .pull-left[ quantity | value ---|--- a | 10 A | 100² k | 10 `\(\sum n\)` | 21 ] -- .pull-right.darkred[ `$$\begin{align} SE &= 100² \times {\sqrt{21} \over 100 \times 10}\\ &= {\huge 54.8} \end{align}$$` ] --- ## Estimating **precision** of the estimate **v. 2** **IF:** Total area covered is small: `\(a_s \ll A\)` **OR:** You might be resampling the same individuals (.green[.small[***Sampling With Replacement*** [SWR]]]) **And:** .blue[*you don't know how they are distributed throughout the range*] - (.green.small[this is a MUCH BETTER assumption]) **Then:** -- `$$SE(\widehat{N}) = {A \over ka} \sqrt{ {\sum n_i^2 - (\sum n_i)^2/k) \over k(k - 1)}}$$` .center[**Much uglier! But more useful!**] --- ## In our example .Large.darkred[$$SE(\widehat{N}) = A \sqrt{ {\sum n_i^2 - (\sum n_i)^2/k) \over k(k - 1)}}$$] raw counts: `n = {0,0,5,0,3,1,2,3,6,1}` .pull-left-40[ quantity | value ---|--- a | 10 A | 100² k | 10 `\(\sum n^2\)` | 85 `\((\sum n)^2\)` | 441 ] .pull-right-60[ ``` r n = c(0,0,5,0,3,1,2,3,6,1) a <- 10; A <- 100^2; k <- 10 (A/(k*a) * sqrt( (sum(n^2) - (sum(n)^2/k)) / (k*(k-1)) )) ``` ``` ## [1] 67.41249 ``` .large.center.darkred[ `\(SE(\widehat{N}) = 67.4\)` ] ] --- class: inverse ## In-class Experiment #### Some facts - There are .red[**95**] students in this class (+/- 1 !). - Each student has a first name, composed of some number *n* of letters - There are a total of .red[***N***] letters in all the first names of the class. #### The challenge: - break into groups of **8-12** and record the number of letters in the first names of all the people in your group - estimate the total number of letters in the names of the entire class. #### formulae .center.red[ `\(\widehat{N} = A {\sum n \over ak}; \,\,\,\,\, SE(\widehat{N}) = A \sqrt{ {\sum n_i^2 - (\sum n_i)^2/k) \over k(k - 1)}}\)` ] --- # Combining estimates .pull-left[ If you have multiple sub-count estimates (e.g. one for each of `\(r\)` sub-region): .darkred[ - `\(\widehat{N_1}, \widehat{N_2}, ..., \widehat{N_r},\)` ] and each estimate has a standard error: .darkred[ - `\(SE(\widehat{N_1}), SE(\widehat{N_2}), ..., SE(\widehat{N_r})\)` ] Then ... ] -- .pull-right[ ... the **total** estimate will be: .darkgreen[ `$$\widehat{N} = \sum_{i = 1}^r \widehat{N_i}$$` ] and the standard error will be: .darkgreen[ `$$SE(\widehat{N}) = \sqrt{\sum_{i = 1}^r SE(\widehat{N_i})^2}$$` ] ] -- .red.center[*Is this estimate more precise?*] --- ## Some other formulae from Fryxell book Chapter 12:  These are used when **sampling areas** are unequal, and account for differences when sampling **with replacement** or **without replacement**. --- ### Poisson process Models *counts*. If you have a perfectly random process with mean *density* (aka *intensity*) 1, you might have some 0 counts, you might have some higher counts. The *average* will be 1:  --- ### Poisson process Here, the intensity is 4 ...  --- ### Poisson process ... and 10. Note, the bigger the intensity, the more "bell-shaped" the curve.  Here's the formula of the Poisson Distribution: `\(\!f(k; \lambda)= \Pr(X{=}k)= \frac{\lambda^k e^{-\lambda}}{k!}\)` --- ### Poisson distribution holds if process is truly random ... not **clustered** or **inhibited**  If you **sample** from these kinds of spatial distributions, your standard error might be smaller (*inhibited*) or larger (*clustering*). This is called *dispersion*. --- ### Also ... densities of animals depend on habitat! .pull-left-60[ **Wolf habitat use**  ] .pull-right-40[ If you look closely: - No locations in lakes - Relatively few in bogs / cultivated areas. - Quite a few in mixed and coniferous forest ] --- ## Imagine a section of forest ... .pull-left-60[ ] --- ## ... with observations of moose .pull-left-60[ ] .pull-right-40[ **How can we tell what the moose prefers?** Habitat | Area | n | Density ---:|:---:|:---:|:--- open | 100 | 21 | 0.21 mixed | 100 | 43 | 0.43 dense | 200 | 31 | 0.16 **total** | 400 | 95 | 0.24 ] .blue[Knowing how densities differ as a function of **covariates** can be very important for generating estimates of abundances, increasing both **accuracy** and **precision**, and informing **survey design**.] --- ### Sample frames need not be **squares** .pull-left-50[  ] .pull-right-50[ ## Transects Linear strip, usually from an aerial survey. Efficient way to sample a lot of territory. If "perfect detection", referred to as a **strip transect**. Statistics - essentially - identical to quadrat sampling. ] .footnote[https://media.hhmi.org/biointeractive/click/elephants/survey/survey-aerial-surveys-methods.html] --- ### General principle: The bigger the sample, the smaller the error. .pull-left[ `\(k\)` sample frames with counts `\(n_i\)`, each of area `\(a\)` out of large area `\(A\)` total area sampled is much less than total Area: `$$a_s = \sum_{i=1}^k a_i = k \times a \ll A$$` ] .pull-right[ then: $$\widehat{N} = {A \over a_s} \sum c_i = {A \over k \times a} \sum n_i $$ `$$SE(\widehat{N}) = {A \over a_s} \sqrt{\sum n_i} = {A \over a} {\sqrt{\sum n_i} \over k}$$` ] -- .pull-left-40[] .pull-right-60.small[ .darkred[ - `\(\widehat{N} = {100 \times 100 \over 10 \times 10 \times 10} \times 21 = 210\)` - `\(SE(\widehat{N}) = {100 \times 100 \over 10 \times 10 \times 10} \sqrt{21} = 45.8\)` - `\(95\% \,\, C.I. = \widehat{N} \pm 1.96 \times SE(\widehat{N}) = \,\, ...\)` - Coefficient of Variation = `\({SE(\widehat{N}) \over \widehat{N}} = \,\, ...\)` ]] --- class: small ## Example - single transect, simple formula .center[ `\(\large SE(\widehat{D}) = {1 \over a}\sqrt{\sum n_i}\,\,\,\)` and `\(\large SE(\widehat{N}) = A \times SE(\widehat{D})\)` ] .pull-left[ .red.large.center[ `\(n = 8\)`; `\(a = 1000\)`; `\(A = 10,000\)` ] <!-- --> ] -- .pull-right[ #### point estimates `$$\widehat{d} = 8/1,000 = .008$$` `$$\widehat{N} = \widehat{d} \times A = 80$$` #### standard errors: `$$SE(\widehat{D}) = {\sqrt{8} \over 1000} = 0.0028$$` `$$SE(\widehat{N}) = 0.0028 \times 10,000 = 28.28$$` #### final abundance estimate: .darkred[ `$$\widehat{N} = 80$$` `$$95\%\, CI(\widehat{N}) = \widehat{N} \pm {1.96 \times SE(\widehat{N})} = \{24.5, 135\}$$` ] ] --- .pull-left[ #### This is why you *want* lots of transects: <!-- --> To capture variation! ] -- .pull-right[ #### This is also why you go along the **gradient** of variation: <!-- --> .red[**gradient**] - means slope of (steepest) change ] --- ## More complex formulae from Fryxell book Chapter 12:  These are used when **sampling areas** are unequal, and account for differences when sampling **with replacement** or **without replacement**. --- ## Simple-SWR - **Simple:** Equal sized sampling frames `\(a_i\)` all equal - **SWR:** Sampling 'with replacement', i.e. frames *OVERLAP*; some individuals counted more than once.. $$SE(\widehat{D}) = {1 \over a_i \sqrt{k(k-1)}} \times \sqrt{\sum n_i^2 - {\left(\sum n_i \right)^2 / k}} $$ `$$SE(\widehat{N}) = A \times SE(\widehat{D})$$` variable | meaning | in book :---:|:---:|:---: `\(k\)` | number of units sampled | .green[*n*] `\(a_i\)` | the area of a *single* unit | .green[*a*] `\(n_i\)` | an individual sample count |.green[*y*] `\(A\)` | total study area | --- ## Example: .pull-left[ <!-- --> .red[ **data:** counts = {2,3,1,1} a = 100; A = 10,000] ] -- .pull-right[ - `\(\widehat{N} = {2+3+1+1 \over 100} \times 10,000 = 70\)` - `\(SE(\widehat{N}) = {10,000 \over 100 \times \sqrt{4 \times 3}} \times \\\sqrt{(1 + 1 + 9 + 4) - {(1+1+3+2)^2\over 4}}\)` - `\(SE(\widehat{N}) = {50 \over \sqrt{3}} \times \sqrt{15 - {49 \over 4}} = 48\)` - `\(\widehat{N} = 70; \,\, 95\% \textrm{CI} = (-23, 164)\)` .green[*Anything wrong with this confidence interval?*] ] --- ## Example: More Heterogeneity .pull-left[ .darkred[ **data:** - counts = {0,0,0,8} - a = 100; A = 10,000 ] <!-- --> ] -- .pull-right[ .green[ `\(\widehat{N} = 80\)` ] `\(SE(\widehat{N}) =\\ {50 \over \sqrt{3}} \times \sqrt{{(0+0+0+8)^2 - {(0+0+0+8^2) \over 4}}} = \\ = {50 \over \sqrt{3}} \times \sqrt{48} = {50 \over \sqrt{3}} {4\sqrt{3}} = 200\)` .green[ `$$95\% \textrm{CI} = (-130, 470)$$` ] .darkred[**Enormous confidence intervals, because of enormous variability in samples!**] ] --- ## Simple - SWOR - **SWOR:** Sampling *without* replacement, i.e. design guarantees no individual is counted more than once. `$$SE(\widehat{D_{swor}}) = SE(\widehat{D_{swr}}) \times \sqrt{1-{a / A}}$$` The larger the proportion sampled (*coverage*) - the smaller the **sampling error.** -- ## Ratio (SWR/SWOR) **Ratio**: unequal sample frames .blue[(e.g. both hula hoops and meter squares)]. - `\(\widehat{D} = \sum n_i / \sum y_i\)` (same as before) - Standard errors: more complicated ... see formulae. --- ### **Stratified sampling** for more efficient estimation  Sample more intensely in those habitats where animals are more likely to be found. Intensely survey .orange[**blocks**] where detection is more difficult. .footnote[https://media.hhmi.org/biointeractive/click/elephants/survey/survey-aerial-surveys-methods.html] --- ### **Stratified sampling** for more efficient estimation  Actual elephant flight paths, .footnote[https://media.hhmi.org/biointeractive/click/elephants/survey/survey-aerial-surveys-methods.html] --- ### **Stratified sampling** .pull-left[] .pull-right[] **Stratification** is used to optimize **effort** and **precision**. Aircraft cost thousands of dollars per hour! (In all of these comprehensize surveys - *design* takes care of **accuracy**). --- ### Sampling strategies .pull-left[] .pull-right[ (a) simple random, (b) stratified random, (c) systematic, (d) pseudo-random (systematic unaligned). Each has advantages and disadvantages. See also: *Adaptive Sampling* ] --- ### Detections usually get *worse* with distance! .pull-left-30[   ] .pull-right-70[ ## Distance Sampling The statistics of accounting for visibility decreasing with distance  ] --- ## Example reindeer in Svalbard  .large[ **Estimated detection distance**, compared to **total count**, incorporated **vegetation modeling**, computed **standard errors**, concluded that you can get a 15% C.V. for 1/2 the cost.] --- ## Example Ice-Seals  --- ## Example: Flag Counting at Baker  --- ## Looks like this  --- ## Nice video on counting caribou https://vimeo.com/471257951